🎬 See Leopold Aschenbrenner's interview with Dwarkesh Patel

A growing number of researchers and engineers at the forefront of AI development believe that artificial general intelligence (AGI) could arrive as early as 2027. This view is based not on hype, but on consistent patterns across model performance, scaling behavior, compute infrastructure, and capital flows. These experts are observing rapid advances in hardware availability, continual gains in algorithmic efficiency, and unprecedented focus from leading AI labs—all of which are accelerating faster than many anticipated.

Taken together, these developments point to a near-term horizon where machines could match or exceed expert-level performance across a wide range of tasks. This isn’t a speculative scenario decades away—it’s something potentially within reach in just a few years.

Leopold Aschenbrenner refers to this perspective as situational awareness: the capacity to recognize where the technology is actually heading based on empirical signals, and to understand that AGI may arrive well before most institutions are prepared. His argument is that AGI is not a theoretical future milestone—it’s an approaching inflection point that will significantly impact economics, national security, and the structure of global power.

1. Why AGI by 2027 is plausible

Scaling laws and trendlines

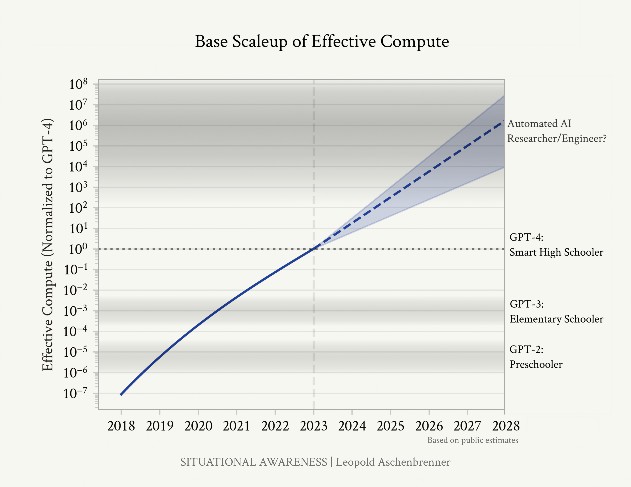

- From 2019 (GPT-2) to 2023 (GPT-4), large language models progressed from generating basic, often error-prone text to performing at near-expert levels on complex academic and professional tasks, including standardized tests and coding benchmarks.

- These improvements were not the result of fundamental theoretical breakthroughs, but came from systematically increasing compute power, expanding model sizes, using more refined datasets, and optimizing training procedures.

- This pattern follows empirical "scaling laws," which show that model loss falls predictably, roughly as a power law, as compute, model size, and training data increase.

- Aschenbrenner argues that if these scaling trends persist, we can expect 2027-era models to perform at or above the level of top human experts across many domains—effectively meeting a functional definition of AGI.
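To make "improves predictably" concrete, the sketch below assumes the common power-law form loss = a · C^(−α), where C is training compute. The constants `A` and `ALPHA` are hypothetical placeholders chosen for illustration. They are not values fitted by Aschenbrenner or by any particular paper. The sketch shows that on a power law, every 10× increase in compute shrinks loss by the same constant factor, which is why the trend plots as a straight line on log-log axes.

```python
"""Toy power-law scaling curve (illustrative constants only)."""

# Hypothetical constants: NOT fitted values from any published scaling law.
A = 10.0      # hypothetical scale coefficient
ALPHA = 0.05  # hypothetical scaling exponent


def predicted_loss(compute: float, a: float = A, alpha: float = ALPHA) -> float:
    """Predicted loss for a training-compute budget under loss = a * compute**(-alpha)."""
    if compute <= 0:
        raise ValueError("compute must be positive")
    return a * compute ** (-alpha)


def loss_factor_per_oom(alpha: float = ALPHA) -> float:
    """Constant factor by which loss shrinks for each 10x (one OOM) more compute."""
    return 10.0 ** (-alpha)


if __name__ == "__main__":
    # Sweep compute from 1e20 to 1e26 FLOP, one OOM at a time.
    for exponent in range(20, 27):
        compute = 10.0 ** exponent
        print(f"1e{exponent} FLOP -> predicted loss {predicted_loss(compute):.3f}")
```

Real scaling-law fits also model parameters and data separately. This single-variable version only illustrates why steady, predictable gains per OOM make extrapolation possible.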

Training compute could grow 100×–1000×

- Training compute for frontier models has grown by roughly 0.5 OOMs (orders of magnitude) per year, or about 3× annually (10^0.5 ≈ 3.16), outpacing Moore’s Law by a significant margin.

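- Public and private investment in GPU clusters and datacenter infrastructure is accelerating. By 2027, frontier training runs may use 2–3 additional OOMs of physical compute beyond GPT-4, that is, 100–1000× more. Algorithmic efficiency gains multiply on top of this to determine effective compute.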
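The OOM arithmetic in these bullets is easy to get wrong, so here is a minimal sketch of it. It assumes a constant growth rate, which the text describes only as a trend. An OOM is a factor of 10, so 0.5 OOMs per year is about 3.16× per year. OOMs accumulated over several years add, while the corresponding multipliers compound. The four-year window below (roughly GPT-4 in 2023 to 2027) is an illustrative assumption.

```python
def ooms_to_multiplier(ooms: float) -> float:
    """Convert orders of magnitude (OOMs) to a plain multiplier: 10 ** ooms."""
    return 10.0 ** ooms


def accumulated_ooms(rate_per_year: float, years: float) -> float:
    """OOMs gained at a constant rate. OOMs add linearly; multipliers compound."""
    return rate_per_year * years


if __name__ == "__main__":
    print(f"0.5 OOM/year = {ooms_to_multiplier(0.5):.2f}x per year")
    # Assumed window: four years at a constant 0.5 OOM/year.
    gained = accumulated_ooms(0.5, 4)
    print(f"4 years -> {gained} OOMs = {ooms_to_multiplier(gained):,.0f}x")
    # The 2-3 OOM range from the text, expressed as multipliers.
    for ooms in (2, 3):
        print(f"{ooms} OOMs = {ooms_to_multiplier(ooms):,.0f}x")
```

Four years at about 3.16× per year compounds to 100×, not roughly 13×. Reading OOM rates as linear growth badly understates them.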

Algorithmic efficiency

- Over the past decade, algorithmic improvements have significantly reduced the compute required to reach a given performance level—by several orders of magnitude in some cases. These gains have come from innovations in training methods, model architectures, data utilization, and optimization techniques.

- Aschenbrenner estimates that we could see another 1–3 OOMs of efficiency gains by the end of the decade. These advances would not only enhance what current compute can deliver but also multiply the effects of hardware scaling: measured in OOMs, the two contributions add. This means that even without major breakthroughs in compute infrastructure, algorithmic progress could supply a large share of the remaining path to AGI.
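Algorithmic efficiency and hardware both act as multipliers on what a training run can achieve, so their OOMs add. The sketch below combines the two ranges quoted in this section (2–3 OOMs of compute and 1–3 OOMs of efficiency). It assumes, for simplicity, that both are realized over the same period. The text gives the efficiency range for the end of the decade, so the sum illustrates the arithmetic and is not a forecast. `OomRange` is a hypothetical helper, not part of any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OomRange:
    """A low/high range of orders of magnitude (hypothetical helper)."""
    low: float
    high: float

    def __add__(self, other: "OomRange") -> "OomRange":
        # Independent multipliers multiply, so their log10 values (OOMs) add.
        return OomRange(self.low + other.low, self.high + other.high)

    def multipliers(self) -> tuple[float, float]:
        """The range expressed as plain multipliers (10 ** OOMs)."""
        return 10.0 ** self.low, 10.0 ** self.high


if __name__ == "__main__":
    physical_compute = OomRange(2, 3)        # range from the compute section
    algorithmic_efficiency = OomRange(1, 3)  # range from this section
    effective = physical_compute + algorithmic_efficiency
    lo, hi = effective.multipliers()
    print(f"effective compute: {effective.low}-{effective.high} OOMs = {lo:,.0f}x to {hi:,.0f}x")
```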

“Unhobbling” the models

- Many current models are artificially constrained by weak prompting, short context windows, and limited tools.

- New techniques—such as reinforcement learning from human feedback (RLHF), tool use, agentic scaffolding, and long-context reasoning—dramatically enhance practical capabilities without increasing base model size.

- By “unhobbling” models and deploying them as agents, the same base capabilities can yield much greater real-world utility.
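Agentic scaffolding is ordinary code wrapped around an unchanged model: a loop lets the model request a tool, runs the tool, and feeds the result back. The sketch below shows only that control flow. `scripted_model` is a hypothetical stand-in for a real language model that follows a fixed script. The `CALL`/`FINAL` text protocol and the `add` tool are also illustrative assumptions, not any lab's or library's actual API.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> function taking one string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    # Adds comma-separated integers, e.g. "17,25" -> "42".
    "add": lambda arg: str(sum(int(x) for x in arg.split(","))),
}


def scripted_model(transcript: list[str]) -> str:
    """Hypothetical stand-in for a language model that follows a fixed script.

    First turn: request the `add` tool. Once a tool result is visible,
    answer with that result.
    """
    results = [line for line in transcript if line.startswith("TOOL_RESULT:")]
    if not results:
        return "CALL add 17,25"
    return "FINAL " + results[-1].removeprefix("TOOL_RESULT:").strip()


def run_agent(task: str, model: Callable[[list[str]], str], max_steps: int = 5) -> str:
    """Minimal scaffolding loop: query the model, execute tool calls, feed results back."""
    transcript = [f"TASK: {task}"]
    for _ in range(max_steps):
        reply = model(transcript)
        transcript.append(reply)
        if reply.startswith("FINAL "):
            return reply.removeprefix("FINAL ")
        if reply.startswith("CALL "):
            parts = reply.split(" ", 2)  # ["CALL", tool_name, argument]
            if len(parts) == 3 and parts[1] in TOOLS:
                output = TOOLS[parts[1]](parts[2])
            else:
                output = f"error: cannot run {reply!r}"
            transcript.append(f"TOOL_RESULT: {output}")
    return "gave up"  # step budget exhausted without a final answer


if __name__ == "__main__":
    print(run_agent("What is 17 + 25?", scripted_model))
```

The model is the same whether or not the loop exists. The scaffolding alone decides whether its requests become tool results, which is the sense in which "unhobbling" adds capability without a larger base model.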

Bottom line: in Aschenbrenner’s view, a continuation of current scaling trends, plus existing optimization methods, could be sufficient to reach AGI by 2027.

2. The intelligence explosion

What happens after AGI?

- Once AI systems reach the level of top-tier researchers, they can begin contributing directly to AI research and development, acting as both collaborators and tools in accelerating innovation.

- These systems would be able to analyze experimental results, generate novel ideas, optimize architectures, and run large-scale simulations at unprecedented speed and scale.

- This sets up a feedback loop where advanced AI helps design even better AI, rapidly improving capabilities with each iteration.

- This recursive self-improvement could trigger an "intelligence explosion," in which AI systems rapidly move far beyond human-level performance across scientific, strategic, and technical domains.
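The feedback loop can be illustrated with a toy differential equation. This is an assumption made here for illustration, not a model from the source. Let P be accumulated progress in OOMs, and suppose the research rate grows with the progress already made: dP/dt = r · (1 + f · P). With f = 0 (no AI contribution), progress is linear. With f > 0, each gain speeds up the next, and growth becomes exponential. The rate `r` and feedback strength `f` used below are hypothetical values.

```python
import math


def simulate_progress(years: float, base_rate: float, feedback: float, dt: float = 0.01) -> float:
    """Euler-integrate dP/dt = base_rate * (1 + feedback * P) from P = 0.

    P is accumulated progress in OOMs; `feedback` is how strongly existing
    progress accelerates further research (0 means no feedback loop).
    """
    progress = 0.0
    for _ in range(round(years / dt)):
        progress += base_rate * (1.0 + feedback * progress) * dt
    return progress


def exact_progress(years: float, base_rate: float, feedback: float) -> float:
    """Closed-form solution of the same equation, used to check the simulation."""
    if feedback == 0:
        return base_rate * years
    return (math.exp(base_rate * feedback * years) - 1.0) / feedback


if __name__ == "__main__":
    # Hypothetical parameters: 0.5 OOM/year baseline, feedback strength 0 or 0.5.
    for f in (0.0, 0.5):
        sim = simulate_progress(5, 0.5, f)
        print(f"feedback={f}: {sim:.2f} OOMs after 5 years (exact {exact_progress(5, 0.5, f):.2f})")
```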

Millions of fast, parallel AI scientists

- In a post-AGI world, organizations may deploy millions of AGI instances in parallel, each operating at accelerated speed.

- This would constitute a research force hundreds of times larger and faster than any human scientific community in history.

- Aschenbrenner argues that this could compress a decade of algorithmic progress into less than a year.
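The "decade into less than a year" claim is, at bottom, a ratio. The sketch below computes it under explicit assumptions. `human_researchers`, `ai_instances`, and `speed_multiplier` are hypothetical inputs. `returns_exponent` is an assumed parameter for diminishing returns, for example when many researchers compete for the same experiment compute. With an exponent of 1, the workforce ratio translates one-for-one into faster progress. With an exponent below 1, it does not.

```python
def research_speedup(ai_instances: int, speed_multiplier: float,
                     human_researchers: int, returns_exponent: float = 1.0) -> float:
    """How much faster overall progress runs than a human-only baseline (toy model).

    The AI workforce is measured in human-researcher-equivalents
    (instances x speed); returns_exponent < 1 models diminishing returns.
    """
    workforce_ratio = ai_instances * speed_multiplier / human_researchers
    return workforce_ratio ** returns_exponent


def compressed_years(human_years: float, speedup: float) -> float:
    """Calendar time needed to match `human_years` of human-only progress."""
    return human_years / speedup


if __name__ == "__main__":
    # Hypothetical inputs: 1M AI instances at 10x human speed vs. 10,000 human researchers.
    for exponent in (1.0, 0.5):
        s = research_speedup(1_000_000, 10, 10_000, exponent)
        print(f"exponent={exponent}: {s:,.0f}x faster, a decade takes {compressed_years(10, s):.2f} years")
```

The result is sensitive to every input. Read the sketch as a way to check the arithmetic, not as support for a particular number.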

Superintelligence is not far behind

- A few additional OOMs of algorithmic and compute gains beyond AGI could result in systems that are vastly superhuman.

- Such systems could rapidly solve scientific challenges, optimize strategic planning, and develop entirely new technologies.

- The gap between human-level AI and superintelligence may not be decades—it could be measured in months or a few years.

If AGI arrives by 2027, superintelligence could plausibly follow before 2030.

3. The geopolitical stakes

A new arms race

- The implications of superintelligence extend beyond technological innovation—they may determine which nations gain long-term geopolitical control.

- A 1–2 year lead in deploying superintelligent systems could translate into decisive advantages across military operations, economic leverage, intelligence gathering, and cyber capabilities.

- Such an advantage may be difficult, if not impossible, for others to recover from in a competitive, high-stakes global environment.

Lessons from history

- Historical military conflicts demonstrate the importance of technological asymmetry: the Gulf War showed how a few decades’ lead in defense tech can produce decisive victories.

- Similar dynamics may apply in the AI domain, but with the added risk of global-scale consequences.

The stakes for the free world

- If democratic nations lead the transition to AGI, they may be able to shape global norms for safe development, implement enforceable alignment standards, and build coalitions for international governance.

- In contrast, if authoritarian regimes like the CCP take the lead, the likely outcome includes stronger global surveillance systems, centralized control over advanced AI capabilities, and a reduced chance of transparency or global coordination.

- Aschenbrenner argues that U.S. leadership is essential not only for strategic security but also for preserving the possibility of accountable, values-aligned AGI deployment on a global scale.

“Superintelligence is a matter of national security, and the United States must win.”

4. What needs to happen

Security is not on track

- Current AI labs operate with minimal cybersecurity relative to the sensitivity of their work.

- Model weights, training data, and research artifacts—often worth hundreds of millions of dollars—are stored in environments that frequently lack rigorous security protocols.

- These assets remain vulnerable to theft by state actors, cybercriminals, or insider threats, raising concerns not only about intellectual property loss but also about the uncontrolled proliferation of powerful models.

- A shift toward classified, defense-grade information security is urgently needed, including hardened infrastructure, strict access controls, active monitoring, and collaboration with national security agencies to identify and mitigate emerging threats.

The coming “AGI Manhattan Project”

- As AGI approaches, the U.S. government is likely to assume a more direct role in overseeing frontier AI development.

- Aschenbrenner anticipates the creation of a centralized, national-scale AGI effort modeled on the original Manhattan Project.

- This would entail secure facilities, cross-agency collaboration, top-tier talent recruitment, and multi-trillion-dollar funding.

“No startup can handle superintelligence.”

5. Situational awareness is rare

- Despite the evidence and strategic implications, most decision-makers remain unaware of how quickly AI is advancing.

- Even within tech, many leaders view AGI timelines as speculative or distant.

- Aschenbrenner’s message is that situational awareness—recognizing the implications of the current trajectory—is essential for institutions that hope to remain relevant in the years ahead.

“If we’re right about the next few years, we are in for a wild ride.”

Situational Awareness is not merely a forecast—it is a framework for interpreting the present. The trends in compute, model performance, and institutional behavior suggest that AGI is not a multi-decade project. It's a near-term transition that will reshape society, the economy, and international relations.

Whether or not one agrees with every element of Aschenbrenner’s timeline, his core message is difficult to dismiss: world-changing AI tools are arriving faster than expected, and preparation must begin now.

Quotes

"It is strikingly plausible that by 2027, models will be able to do the work of an AI researcher/engineer. That doesn’t require believing in sci-fi; it just requires believing in straight lines on a graph."

"Look. The models, they just want to learn. You have to understand this. The models, they just want to learn." – Ilya Sutskever (circa 2015, via Dario Amodei)

"A decade earlier, models could barely identify simple images of cats and dogs; four years earlier, GPT-2 could barely string together semi-plausible sentences. Now we are rapidly saturating all the benchmarks we can come up with. And yet this dramatic progress has merely been the result of consistent trends in scaling up deep learning."

"We are racing through the OOMs extremely rapidly [...]. While the inference is simple, the implication is striking. Another jump like that very well could take us to AGI, to models as smart as PhDs or experts that can work beside us as coworkers. Perhaps most importantly, if these AI systems could automate AI research itself, that would set in motion intense feedback loops."

"If you keep being surprised by AI capabilities, just start counting the OOMs."

"It used to take decades to crack widely-used benchmarks; now it feels like mere months."

"If there’s one lesson we’ve learned from the past decade of AI, it’s that you should never bet against deep learning."

"With each OOM of effective compute, models predictably, reliably get better. If we can count the OOMs, we can (roughly, qualitatively) extrapolate capability improvements. That’s how a few prescient individuals saw GPT-4 coming."

"[...] The upshot is clear: we are rapidly racing through the OOMs. There are potential headwinds in the data wall [...]—but overall, it seems likely that we should expect another GPT-2-to-GPT-4-sized jump, on top of GPT-4, by 2027."

"There’s a very real chance things stall out [...]. But I think it’s reasonable to guess that the labs will crack it, and that doing so will not just keep the scaling curves going, but possibly enable huge gains in model capabilities."

"Scaling up simple deep learning techniques has just worked, the models just want to learn, and we’re about to do another 100,000x+ by the end of 2027. It won’t be long before they’re smarter than us."

"AI progress won’t stop at human-level. Hundreds of millions of AGIs could automate AI research, compressing a decade of algorithmic progress (5+ OOMs) into 1 year. We would rapidly go from human-level to vastly superhuman AI systems."

"Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make." – I. J. Good (1965)

"After initially learning from the best human games, AlphaGo started playing against itself—and it quickly became superhuman, playing extremely creative and complex moves that a human would never have come up with."

"The jump to superintelligence would be wild enough at the current rapid but continuous rate of AI progress [...]. But it could be much faster than that, if AGI automates AI research itself."

"Once we get AGI, we won’t just have one AGI. [...] Given inference GPU fleets by then, we’ll likely be able to run many millions of them [...]."

"Get to AGI [...]—and AI systems will become superhuman—vastly superhuman. They will become qualitatively smarter than you or I, much smarter, perhaps similar to how you or I are qualitatively smarter than an elementary schooler."

"Automated AI research could probably compress a human decade of algorithmic progress into less than a year (and that seems conservative)."

"Superintelligence would likely provide a decisive military advantage, and unfold untold powers of destruction. We will be faced with one of the most intense and volatile moments of human history."

"We could see economic growth rates of 30%/year and beyond, quite possibly multiple doublings a year. This follows fairly straightforwardly from economists’ models of economic growth."

"Military power and technological progress has been tightly linked historically, and with extraordinarily rapid technological progress will come concomitant military revolutions."

"The barriers to even trillions of dollars of datacenter buildout in the US are entirely self-made. [...] The clusters can be built in the US, and we have to get our act together to make sure it happens in the US. American national security must come first [...]. If American business is unshackled, America can build like none other (at least in red states)."

"We must be prepared for our adversaries to “wake up to AGI” in the next few years. AI will become the #1 priority of every intelligence agency in the world. In that situation, they would be willing to employ extraordinary means and pay any cost to infiltrate the AI labs."

"The German project had narrowed down on two possible moderator materials: graphite and heavy water. [...] Since Fermi had kept his result secret, the Germans did not have Fermi’s measurements to check against, and to correct the error. This was crucial: it left the German project to pursue heavy water instead—a decisive wrong path that ultimately doomed the German nuclear weapons effort."

"[...] We will face a situation where, in less than a year, we will go from recognizable human-level systems [...] to much more alien, vastly superhuman systems that pose a qualitatively different, fundamentally novel technical alignment problem."

"[...] I expect that within a small number of years, these AI systems will be integrated in many critical systems, including military systems (failure to do so would mean complete dominance by adversaries). It sounds crazy, but remember when everyone was saying we wouldn’t connect AI to the internet? The same will go for things like “we’ll make sure a human is always in the loop!”"

"What makes this incredibly hair-raising is the possibility of an intelligence explosion: that we might make the transition from roughly human-level systems to vastly superhuman systems extremely rapidly, perhaps in less than a year."

"Superintelligence will be the most powerful technology—and most powerful weapon—mankind has ever developed. It will give a decisive military advantage, perhaps comparable only with nuclear weapons."

"Our generation too easily takes for granted that we live in peace and freedom. And those who herald the age of AGI in SF too often ignore the elephant in the room: superintelligence is a matter of national security, and the United States must win."

"At stake in the AGI race will not just be the advantage in some far-flung proxy war, but whether freedom and democracy can survive for the next century and beyond."

"The free world must prevail over the authoritarian powers in this race."

"[...] A healthy lead gives us room to maneuver: the ability to “cash in” parts of the lead, if necessary, to get safety right [...]."

"The national security state will get involved [...] by 27/28, we’ll get some form of government AGI project."

"No startup can handle superintelligence."

"If the government project is inevitable, earlier seems better."

"Now it feels extremely visceral. I can see it. I can see how AGI will be built. [...] I can basically tell you the cluster AGI will be trained on and when it will be built, the rough combination of algorithms we’ll use, the unsolved problems and the path to solving them, the list of people that will matter. I can see it."

"Will we tame superintelligence, or will it tame us? Will humanity skirt self-destruction once more? The stakes are no less."